Neural Networks and Deep Learning

Posted on January 09, 2018 in course

After the first semester of my PhD program, I decided to relax a bit with my girlfriend and her family, and also to work on things other than my research and my doctoral program. One of the goals I set was to take the first course of Andrew Ng's Deep Learning Specialization. If you are familiar with my old webpage, you know I have written a little about some extra courses I took in the past.

For this break, I took the course Neural Networks and Deep Learning, which you can access here.

I believe I reached the goal of this course, which was to understand how Deep Learning can be applied to a variety of tasks. I was also able to build, train, and apply deep neural networks in Python, which gave me a nice overview of neural network architectures.

Week 1

In the first week, Andrew Ng gives a brief introduction to Deep Learning, a branch of Machine Learning that can be applied to both supervised and unsupervised learning. Dr. Ng gives several examples to illustrate types of applications and their categories.

Here are some materials and notes:

- What is a neural network?

- Supervised learning with neural networks

- Why is deep learning taking off?
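To make the first question concrete, here is a minimal sketch of the smallest possible "network": a single neuron that applies a linear function to its input and then a ReLU, max(0, z). It uses NumPy, and the weights `w`, `b` and the house-size inputs are made up purely for illustration; nothing here is fitted to real data.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

def single_neuron(x, w, b):
    """One neuron: a linear function of the input followed by a ReLU."""
    return relu(w * x + b)

# Hypothetical parameters, chosen only for illustration (not learned from data).
w, b = 0.5, -20.0
sizes = np.array([10.0, 40.0, 100.0])  # e.g. house sizes in arbitrary units
prices = single_neuron(sizes, w, b)    # small sizes are clipped to 0 by the ReLU
```

Stacking many such neurons, and feeding the outputs of one layer into the next, is what turns this toy into a neural network.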

Also in the first week, Andrew Ng interviews Geoffrey Hinton (http://www.cs.toronto.edu/~hinton/), a pioneer of artificial neural networks.

Week 2

In week 2, I built my first image recognition algorithm: a logistic regression classifier that recognizes cats with 70% accuracy.
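A classifier of this kind can be sketched as logistic regression trained with batch gradient descent on the cross-entropy cost. This is my own minimal NumPy version, not the assignment's code: the function names, the learning rate, and the toy two-feature dataset are assumptions. For images, each picture would first be flattened into one column of `X`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, Y, learning_rate=0.1, num_iterations=1000):
    """X: (n_features, m) inputs, Y: (1, m) labels in {0, 1}."""
    n, m = X.shape
    w = np.zeros((n, 1))
    b = 0.0
    costs = []
    eps = 1e-12  # avoids log(0)
    for _ in range(num_iterations):
        A = sigmoid(w.T @ X + b)  # predicted probabilities, shape (1, m)
        cost = -np.mean(Y * np.log(A + eps) + (1 - Y) * np.log(1 - A + eps))
        costs.append(cost)
        dZ = A - Y                # gradient of the cost w.r.t. the logits
        dw = X @ dZ.T / m
        db = np.sum(dZ) / m
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b, costs

def predict(w, b, X):
    """Label 1 when the predicted probability exceeds 0.5."""
    return (sigmoid(w.T @ X + b) > 0.5).astype(int)

# Hypothetical linearly separable toy data (not the cat dataset).
rng = np.random.default_rng(0)
X = rng.normal(size=(2, 200))
Y = (X[0:1] + X[1:2] > 0).astype(int)
w, b, costs = train_logistic_regression(X, Y)
accuracy = np.mean(predict(w, b, X) == Y)
```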

Interpretation:

- Different learning rates give different costs and thus different prediction results.

- If the learning rate is too large (0.01), the cost may oscillate up and down. It may even diverge (though in this example, using 0.01 still eventually ends up at a good value for the cost).

- A lower cost doesn't mean a better model. You have to check for possible overfitting, which happens when the training accuracy is much higher than the test accuracy.

- In deep learning, we usually recommend that you:

    - Choose the learning rate that best minimizes the cost function.

    - If your model overfits, use other techniques to reduce overfitting. (We'll talk about this in later videos.)
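The learning-rate behavior described above can be reproduced on a toy problem. This sketch runs plain gradient descent on the hypothetical cost f(w) = w², whose gradient is 2w, so every step multiplies w by (1 - 2α). The thresholds here belong to this toy function only; whether 0.01 counts as "too large" in the assignment depends on that assignment's cost function, not on this one.

```python
def gradient_descent_on_quadratic(learning_rate, w0=1.0, steps=10):
    """Minimize f(w) = w**2 (gradient 2w) and record w after every step."""
    w = w0
    history = [w]
    for _ in range(steps):
        w = w - learning_rate * 2 * w  # equivalent to w *= (1 - 2 * learning_rate)
        history.append(w)
    return history

for lr in (0.1, 0.9, 1.1):
    trace = gradient_descent_on_quadratic(lr)
    print(f"lr={lr}: final w = {trace[-1]:.4f}, final cost = {trace[-1] ** 2:.4f}")
```

With α = 0.1 the parameter shrinks steadily toward the minimum at 0; with α = 0.9 it overshoots and flips sign on every step while still converging; with α = 1.1 it grows without bound, which is the divergence case.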

Week 3

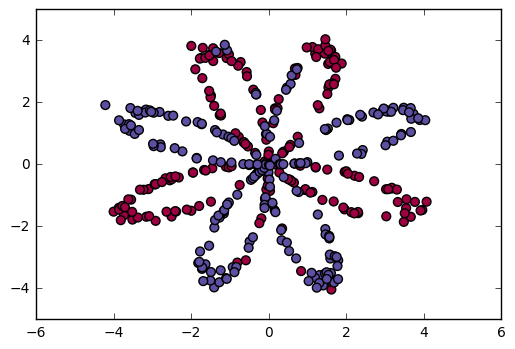

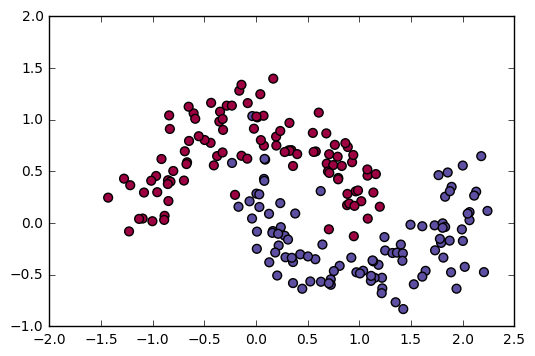

In the week 3 programming assignment, I built my first neural network with a single hidden layer. The assignment generates red and blue points that form a flower shape, and the network is then fit to classify the points correctly.
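A single-hidden-layer network like this one can be sketched with a tanh hidden layer, a sigmoid output, and backpropagation for the cross-entropy cost. This is a hypothetical stand-in, not the assignment's code: the dataset is a simple disc (label 1 inside the unit circle) rather than the flower, and the initialization scale, `learning_rate=0.5`, and `n_h=5` are my own choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_x, n_h, n_y, rng):
    # Random weights break the symmetry between hidden units; zero biases are fine.
    return {"W1": rng.normal(size=(n_h, n_x)), "b1": np.zeros((n_h, 1)),
            "W2": rng.normal(size=(n_y, n_h)), "b2": np.zeros((n_y, 1))}

def forward(X, p):
    A1 = np.tanh(p["W1"] @ X + p["b1"])   # hidden layer, tanh activation
    A2 = sigmoid(p["W2"] @ A1 + p["b2"])  # output layer, probability of class 1
    return A1, A2

def train(X, Y, n_h=5, learning_rate=0.5, num_iterations=5000, seed=0):
    rng = np.random.default_rng(seed)
    p = init_params(X.shape[0], n_h, Y.shape[0], rng)
    m = X.shape[1]
    eps = 1e-12  # avoids log(0)
    costs = []
    for _ in range(num_iterations):
        A1, A2 = forward(X, p)
        costs.append(-np.mean(Y * np.log(A2 + eps) + (1 - Y) * np.log(1 - A2 + eps)))
        # Backpropagation: compute every gradient before updating any parameter.
        dZ2 = A2 - Y
        dW2 = dZ2 @ A1.T / m
        db2 = np.sum(dZ2, axis=1, keepdims=True) / m
        dZ1 = (p["W2"].T @ dZ2) * (1 - A1 ** 2)  # tanh'(z) = 1 - tanh(z)^2
        dW1 = dZ1 @ X.T / m
        db1 = np.sum(dZ1, axis=1, keepdims=True) / m
        for name, grad in (("W1", dW1), ("b1", db1), ("W2", dW2), ("b2", db2)):
            p[name] -= learning_rate * grad
    return p, costs

def predict(X, p):
    return (forward(X, p)[1] > 0.5).astype(int)

# Hypothetical 2-D data: label 1 for points inside the unit circle.
rng = np.random.default_rng(1)
X = rng.normal(size=(2, 400))
Y = (np.sum(X ** 2, axis=0, keepdims=True) < 1.0).astype(int)
params, costs = train(X, Y)
train_accuracy = np.mean(predict(X, params) == Y)
```

Changing `n_h` and comparing training accuracy against accuracy on held-out points is one way to see the effect of hidden layer size discussed below.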

Interpretation:

- The larger models (with more hidden units) are able to fit the training set better, until eventually the largest models overfit the data.

- The best hidden layer size seems to be around n_h = 5. Indeed, a value around here seems to fit the data well without incurring noticeable overfitting.

- You will also learn later about regularization, which lets you use very large models (such as n_h = 50) without much overfitting.
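For reference, one common form of the regularization mentioned above is L2 regularization, which adds a penalty on the squared weights to the cross-entropy cost. Written for an L-layer network with m training examples, output activations a^{[L](i)}, and a regularization strength λ (a hyperparameter; the notation here is mine):

$$
J_{\text{reg}} = -\frac{1}{m}\sum_{i=1}^{m}\Big(y^{(i)}\log a^{[L](i)} + \big(1-y^{(i)}\big)\log\big(1-a^{[L](i)}\big)\Big) + \frac{\lambda}{2m}\sum_{l=1}^{L}\big\lVert W^{[l]}\big\rVert_F^{2}
$$

In gradient descent, this only adds a term (λ/m) W^{[l]} to each weight gradient dW^{[l]}, which shrinks the weights on every step; a larger λ pushes harder against overfitting.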

Week 4

In the last week of the course, I built and trained a deep L-layer neural network to classify cat vs. non-cat images with 80% accuracy.
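The forward pass of a deep L-layer network of this kind can be sketched as [LINEAR -> ReLU] repeated L - 1 times, followed by LINEAR -> sigmoid. The layer sizes in `layer_dims` (a flattened 64×64×3 image in, one cat probability out) and the 1/√(fan-in) initialization are assumptions for illustration. Training would add a backward pass and parameter updates, which are omitted to keep the sketch short.

```python
import numpy as np

def initialize_parameters(layer_dims, seed=0):
    """layer_dims = [n_x, n_h1, ..., n_y]; returns W1, b1, ..., WL, bL."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        # Scale by 1/sqrt(fan-in) so early activations neither explode nor vanish.
        params[f"W{l}"] = rng.normal(size=(layer_dims[l], layer_dims[l - 1])) / np.sqrt(layer_dims[l - 1])
        params[f"b{l}"] = np.zeros((layer_dims[l], 1))
    return params

def l_layer_forward(X, params):
    """[LINEAR -> ReLU] x (L-1), then LINEAR -> sigmoid. Returns output and caches."""
    L = len(params) // 2
    A = X
    caches = []  # (input activation, pre-activation) per layer, needed for backprop
    for l in range(1, L):
        Z = params[f"W{l}"] @ A + params[f"b{l}"]
        caches.append((A, Z))
        A = np.maximum(0.0, Z)  # ReLU
    ZL = params[f"W{L}"] @ A + params[f"b{L}"]
    caches.append((A, ZL))
    AL = 1.0 / (1.0 + np.exp(-ZL))  # sigmoid: probability of "cat"
    return AL, caches

layer_dims = [12288, 20, 7, 5, 1]  # hypothetical sizes: 64*64*3 inputs -> 1 output
params = initialize_parameters(layer_dims)
X = np.random.default_rng(1).random((12288, 3))  # three random stand-in "images"
AL, caches = l_layer_forward(X, params)
```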

References

Geoffrey Hinton interview: https://www.youtube.com/watch?v=-eyhCTvrEtE

Pieter Abbeel: https://people.eecs.berkeley.edu/~pabbeel/

UFLDL Tutorial, Multi-Layer Neural Networks: http://ufldl.stanford.edu/tutorial/supervised/MultiLayerNeuralNetworks/